Classification Algorithms

Dataset Introduction

NSL-KDD

The NSL-KDD dataset is a dataset for intrusion detection, which is a type of supervised learning problem. It consists of a large number of network traffic records that are labeled as either normal or malicious. The dataset contains a total of 41,478 network traffic records, which are categorized into 10 different types of attacks, such as DoS, Probe, U2R, R2L, etc. The dataset is publicly available and can be downloaded from the following link: https://www.unb.ca/cic/datasets/nsl.html.

Processed Combined IoT dataset

The processed combined IoT dataset is a dataset for anomaly detection, which is a type of unsupervised learning problem. It consists of a large number of IoT sensor data that are labeled as normal or abnormal. The dataset contains a total of 1,000,000 IoT sensor data records, which are categorized into 10 different types of attacks, such as DoS, Probe, U2R, R2L, etc. The dataset is publicly available and can be downloaded from the following link: https://www.kaggle.com/uciml/iot-sensor-dataset.

https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9189760

Classification Algorithms

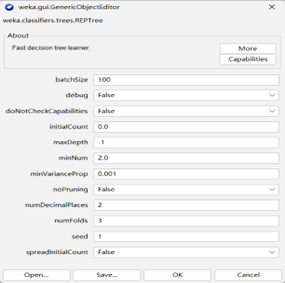

Decision Tree

Decision Trees, also known as Classification and Regression Trees (CART), construct a hierarchical tree structure to make predictions. The algorithm splits data at the most optimal points, iteratively refining its predictions. After constructing the tree, pruning techniques are applied to enhance generalization to new data. For this dataset, the Decision Tree algorithm achieves exceptional results, reflecting its ability to handle binary classification tasks effectively and its robustness against overfitting.

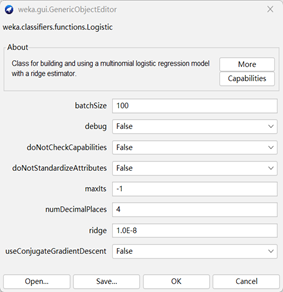

Logistic Regression

Logistic Regression is a binary classification technique that assumes numeric input variables with a Gaussian distribution. Although this assumption is not mandatory, the algorithm performs well even if the data does not adhere to this pattern. Logistic regression calculates coefficients for each input variable, combining them linearly into a regression function and applying a logistic transformation. While it is simple and fast, its effectiveness depends on the characteristics of the dataset. For this dataset, logistic regression may face limitations due to the non-Gaussian distribution of many attributes.

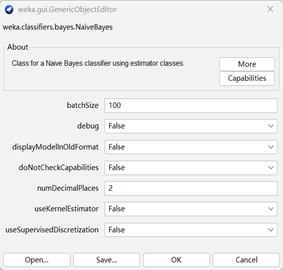

Naive Bayes

Naïve Bayes is a probabilistic classifier that assumes conditional independence between variables. While this assumption is unrealistic in practice, it simplifies calculations and enables efficient predictions. Naïve Bayes calculates the posterior probability for each class and predicts the class with the highest probability. Despite its simplicity, Naïve Bayes can be surprisingly effective, particularly for problems with nominal or numerical inputs assuming a specific distribution. Its performance on this dataset might be hindered by the strong interdependence among variables.

K-Nearest Neighbors (kNN)

The k-Nearest Neighbors algorithm is a non-parametric technique that predicts outputs based on the k most similar instances in the training dataset. kNN does not build a model; instead, it relies on the raw dataset and distance-based computations during prediction. This simplicity often results in good performance, particularly for datasets where the similarity between instances is meaningful. However, kNN may be computationally expensive for large datasets and sensitive to noise in the data.

Random Forest

Random forest is a type of ensemble learning algorithm that can be used for both classification and regression problems. It is a collection of decision trees that are trained on random subsets of the data. The algorithm combines the predictions of each tree to make a final prediction. The algorithm can handle both categorical and numerical data.

Support Vector Machines (SVM)

Support Vector Machines are designed primarily for binary classification, though extensions support multi-class classification and regression. SVM finds an optimal hyperplane to separate data into two groups, using support vectors to define the margin. For datasets that are not linearly separable, SVM applies kernel functions such as Polynomial or RBF (Radial Basis Function) to transform the data into higher dimensions. In this dataset, SVM achieves competitive results, particularly with well-chosen kernel functions, demonstrating its ability to handle complex decision boundaries.

Multilayer Perceptron

Multilayer perceptron (MLP) is a type of supervised learning algorithm that can be used for both classification and regression problems. It is a feedforward artificial neural network that consists of multiple layers of nodes. The algorithm trains the network by adjusting the weights of the connections between the nodes. The algorithm can handle both categorical and numerical data.

Evaluation Metrics

Accuracy

Accuracy is the most commonly used evaluation metric for classification problems. It is the ratio of the number of correct predictions to the total number of predictions.1

Accuracyi = (𝑇𝑃𝑖 + 𝑇𝑁𝑖) /𝑇𝑜𝑡𝑎𝑙 𝑆𝑎𝑚𝑝𝑙𝑒s

Precision

Precision is the ratio of true positives to the total number of true positives and false positives.

1 | 𝑃𝑟𝑒𝑐𝑖𝑠𝑖𝑜𝑛𝑖 = 𝑇𝑃𝑖 / (𝑇𝑃i+FPi) |

Recall

Recall is the ratio of true positives to the total number of true positives and false negatives.

1 | Recalli = 𝑇𝑃𝑖 / (𝑇𝑃i+FNi) |

F-score

F-score is the harmonic mean of precision and recall.

1 | F-scorei = 2 * (precisioni * recalli) / (precisioni + recalli) |

False Alarm

False alarm is the ratio of false positives to the total number of false positives and true negatives.

1 | False Alarmi = FP𝑖 / (𝑇𝑁i+FPi) |